Conversations about being on call usually start with complaints. Here's how to change the narrative and build a stronger, more compassionate process.

A few years ago, I took over responsibility for a significant portion of our infrastructure. It was a complex undertaking that, if not managed properly, could have caused major disruptions and economic consequences over a large area. Work like this needs well-equipped engineers who understand the systems and their dependencies, as well as a robust team that is available for emergencies during off-peak hours.

What I found was a well-intentioned team that was unprepared for the erratic nature of on-call work. My goal was to transform the on-call experience from a constant source of anxiety into a model of stability.

Being on call might cause anxiety: According to the Hones, being on call is often one of the most stressful aspects of a developer's job and a significant socio-technical challenge. That stress stems from a few basic problems that make being on call particularly difficult.

Unpredictable work schedule: Being on call means engineers must be available to respond to emergencies after hours. The resulting interference with personal time makes work-life balance unstable.

High stakes and high pressure: Being on call means managing failures that could significantly affect business operations, and stakes that high create a lot of tension. Handling challenging issues alone after hours adds to the feeling of isolation.

Lack of preparation: Engineers who do not get the required training, preparation, and experience will feel unprepared to handle the issues that arise. This compounds the problem by instilling anxiety and a fear of making the wrong decision.

Alert fatigue: When non-critical alerts arrive frequently, engineers become desensitised to them and find it harder to recognise significant issues. The results are increased stress, slower reaction times, missed critical alerts, and reduced system reliability. It also breeds general dissatisfaction with the work and hinders swift action when a real issue does occur.

Despite its challenges, being on call is essential for maintaining the stability and health of production systems. Someone needs to cover your services during off-peak hours.

Being close to production systems is essential for on-call engineers since it ensures:

Our transformation started with simple, fundamental first steps.

Creating a Checklist for Before Being On Call:

A pre-on-call checklist is an easy yet efficient way to ensure engineers have completed all necessary activities before starting their shift. It prevents errors, reduces the likelihood of being unprepared, and promotes a proactive approach to incident management. The checklist we developed consisted of the following categories, each with specific assignments:

After the engineers felt at ease using the pre-on-call checklist, we added them to the rotation. We warned them that while they understood the challenges in theory, handling a real crisis could be more complex and demanding.

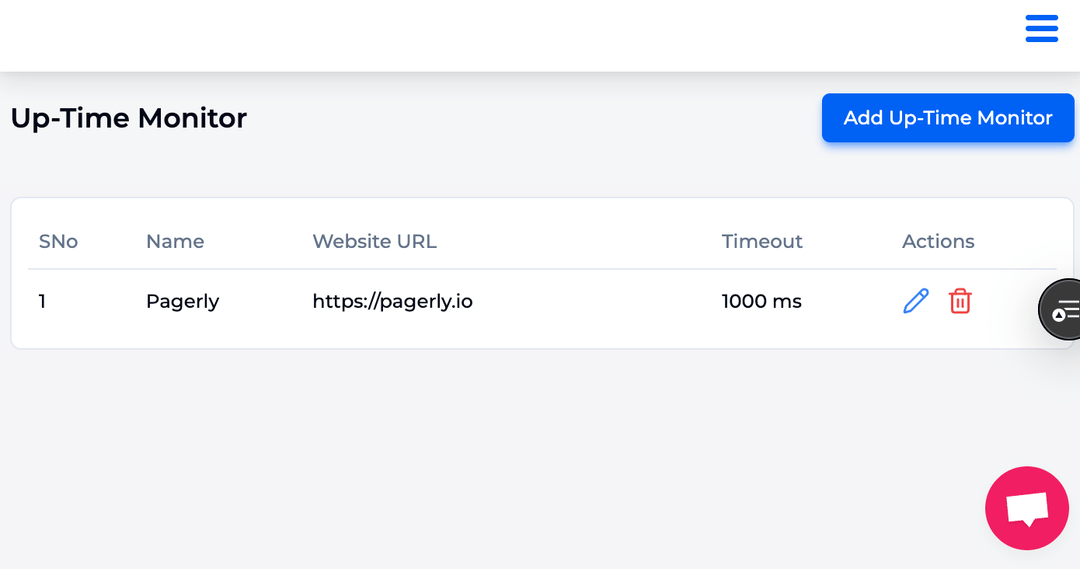

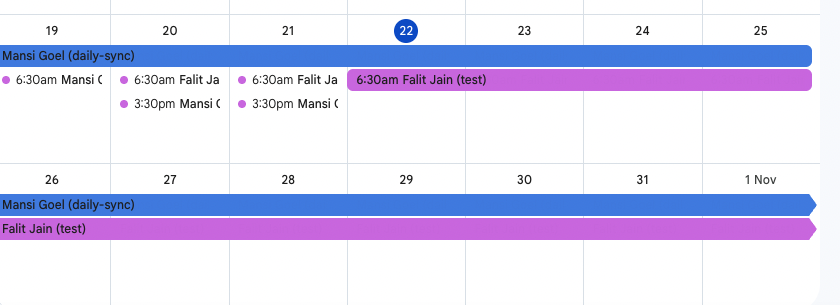

Lastly, ensure that employees are accurately included in any payment systems your organization may have for on-call engineers. Pagerly is an excellent resource for exploring various on-call payment options.

We introduced the "Wheel of Misfortune," a role-playing game inspired by Google's Site Reliability Engineering (SRE) practice. The idea is simple: we simulate service disruptions to assess and improve on-call engineers' responses in a safe environment. These drills help teams become better prepared to deal with real-world incidents.

In a 2019 post, systems engineer Jesús Climent of Google Cloud wrote, "If you've ever played a role-playing game, you presumably already know how it works: A scenario is run by a leader, such as the Dungeon Master, or DM, in which certain non-player characters encounter the players, who are the ones playing, in a predicament (in our example, a production emergency) and engage with them."

It's a useful way to ensure that engineers can handle significant yet unusual events confidently and skilfully. Here are the steps to enhance on-call readiness:

The next step was making sure we had the right data for helpful dashboards and alerts, because relevant and reliable data is essential for effective monitoring and incident response. We thoroughly tested our initial assumptions about what makes a "good" dashboard or alert. Verifying the quality and relevance of the data helps ensure that alerts are reliable indicators of actual issues and keeps false positives down.
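One way to test assumptions about alert quality is to label past alerts by whether the responder actually had to act, then look for rules that fire often but rarely matter. The following is a minimal sketch of that idea, not the tooling we used: the `AlertEvent` schema, the rule names, and the thresholds are all hypothetical and would need to be adapted to whatever your alerting system exports.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertEvent:
    """One fired alert, labelled after the fact by the responder (hypothetical schema)."""
    rule: str          # name of the alerting rule that fired
    actionable: bool   # True if the responder had to do something


def noisy_rules(events, min_fires=5, max_actionable_ratio=0.5):
    """Return (rule, fire_count, actionable_ratio) for rules that fire
    at least min_fires times but are actionable at most
    max_actionable_ratio of the time, noisiest first."""
    fires = Counter(e.rule for e in events)
    acted = Counter(e.rule for e in events if e.actionable)
    report = []
    for rule, count in fires.items():
        ratio = acted[rule] / count  # Counter returns 0 for missing rules
        if count >= min_fires and ratio <= max_actionable_ratio:
            report.append((rule, count, ratio))
    # Lowest actionable ratio first; ties broken by highest fire count.
    return sorted(report, key=lambda r: (r[2], -r[1]))


# Made-up history purely for illustration.
history = (
    [AlertEvent("disk_usage_warn", False)] * 9
    + [AlertEvent("disk_usage_warn", True)]
    + [AlertEvent("checkout_5xx", True)] * 4
    + [AlertEvent("cpu_spike", False)] * 6
)

for rule, count, ratio in noisy_rules(history):
    print(f"{rule}: fired {count}x, actionable {ratio:.0%}")
```

Rules that show up in this report are candidates for retuning, downgrading to a non-paging notification, or deleting outright.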

An efficient on-call system depends on its ability to record and analyze important business KPIs or system performance data from incidents. We ensured that each service had the proper metrics, routinely logged them, and maintained a dashboard for quick overviews.

As alert fatigue is a common issue with on-call systems, we revamped our monitoring protocols to address it:

After the team developed a thorough understanding of our deployments, flow, and health data, we began searching for further areas to optimize.

As soon as things normalized, we turned to the areas that needed more effort or had knowledge gaps.

As soon as the firefighting stops and you have some breathing room, start investing aggressively in SLOs (Service Level Objectives) and SLIs (Service Level Indicators). They are the only way your team can begin behaving proactively instead of reacting to everything that happens. SLOs, such as 99.9% uptime, define the target level of service reliability. SLIs, such as the measured uptime percentage, are the metrics that show how well you're meeting those objectives.

By defining and adhering to SLOs and SLIs, your team can stop patching problems all the time and focus on meeting predefined performance goals.
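To make the relationship between the two concrete: a 99.9% SLO leaves 0.1% of requests (or about 43.2 minutes in a 30-day month) as an "error budget" that can be spent before the objective is missed. The sketch below computes a request-based SLI and the share of that budget already used. The function name, the field names, and the request counts are illustrative assumptions, not figures from our systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BudgetReport:
    sli: float              # measured fraction of successful requests
    allowed_failures: float  # failures the SLO tolerates in this window
    budget_consumed: float   # fraction of the error budget already spent
    slo_met: bool


def error_budget_report(slo_target, total_requests, failed_requests):
    """Compare a measured availability SLI against an SLO target.

    slo_target is a fraction such as 0.999 for "99.9%".
    """
    if not 0 < slo_target < 1:
        raise ValueError("slo_target must be strictly between 0 and 1")
    if total_requests <= 0 or not 0 <= failed_requests <= total_requests:
        raise ValueError("request counts are inconsistent")

    sli = (total_requests - failed_requests) / total_requests
    allowed_failures = (1 - slo_target) * total_requests
    budget_consumed = failed_requests / allowed_failures
    return BudgetReport(sli, allowed_failures, budget_consumed, sli >= slo_target)


# Illustrative numbers: one million requests in the window, 400 of them failed.
report = error_budget_report(slo_target=0.999,
                             total_requests=1_000_000,
                             failed_requests=400)
print(f"SLI: {report.sli:.4%}")
print(f"Error budget consumed: {report.budget_consumed:.0%}")
print(f"SLO met: {report.slo_met}")
```

Tracking the consumed budget over time gives the team an early signal: a budget that is burning quickly is a reason to slow down risky changes before the objective is actually missed.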

The engineering team has the responsibility to be available and accountable for their code, while management is obligated to ensure that on-call work is done effectively. There is reciprocity in this relationship.

Engineering leaders can model successful on-call strategies through how they organize and prioritize their systems. Your company's previous successes and specific needs will determine your on-call strategy. When adapting your plan to your team, consider what you genuinely require and what you can reasonably do without.

By being proactive, you can maintain stability while also raising the bar for operational resilience. However, this approach requires ongoing maintenance, care, and continuous improvement. The good news is that a practical solution is within reach.